Reference15r1:Concept App Service myApps Assistant

First Draft |

m added a remark for the next version 16r1 |

||

| (57 intermediate revisions by 2 users not shown) | |||

| Line 1: | Line 1: | ||

[[Category:Concept|Apps]] | [[Category:Concept|Apps]] | ||

[[Category:Concept App Service myApps Assistant]] | [[Category:Concept App Service myApps Assistant]] | ||

| Line 8: | Line 7: | ||

== Overview == | == Overview == | ||

The myApps Assistant App offers an interface for other apps to access a remote large language model (LLM). This might be a locally hosted open-source model like | The myApps Assistant App offers an interface for other apps to access a remote large language model (LLM). This might be a locally hosted open-source model like '''mixtral''' or '''deepseek-r1''' or a model hosted by providers such as openAI (e.g., gpt-3.5-turbo, gpt-4o...) or mistral '''('''https://mistral.ai/''')'''. Other apps can integrate the assistant service via the [https://sdk.innovaphone.com/15r1/web1/com.innovaphone.assistant/com.innovaphone.assistant.htm local assistant JavaScript API] in the client and thus offer the response of the LLM. | ||

The service functions as an intermediary and contains a corresponding backend. Therefore, it is required to self-host an LLM Service or possess an account with an external service provider. In the event of the latter, you can configure your API key in the PBX Manager for this app service, if needed. | The service functions as an intermediary and contains a corresponding backend. Therefore, it is required to self-host an LLM Service or possess an account with an external service provider. In the event of the latter, you can configure your API key in the '''Settings App''' (former PBX Manager Plugin) for this app service, if needed. | ||

Currently, only the openAI API Chat Completions API v1 is implemented (which is also supported by several open source projects e.g. https://ollama.com/), but more API implementations are likely to follow. | Currently, only the openAI API Chat Completions API v1 is implemented (which is also supported by several open source projects e.g. https://ollama.com/), but more API implementations are likely to follow. | ||

== Licensing == | == Licensing == | ||

An appropriate license ''App(innovaphone-assistant)'' must be installed on the PBX to enable the App for specified users. | |||

The License can be assigned directly to a specific User Object or via a Config Template. | |||

== Installation == | == Installation == | ||

Go to the PBX manager and open the '''"AP app installer"''' plugin. On the right panel, the App Store will be shown. ''Hint : if you access it for the first time, you will need to accept the "Terms of Use of the innovaphone App Store"'' | Go to the '''Settings App''' (PBX manager) and open the '''"AP app installer"''' plugin. On the right panel, the App Store will be shown. ''Hint : if you access it for the first time, you will need to accept the "Terms of Use of the innovaphone App Store"'' | ||

* In the search field located on the top right corner of the store, search for '''"myApps Assistant"''' and click on it | * In the search field located on the top right corner of the store, search for '''"myApps Assistant"''' and click on it | ||

* Select the proper firmware version, for example '''"Version 15r1"''' and click on install | * Select the proper firmware version, for example '''"Version 15r1"''' and click on install | ||

* Tick "I accept the terms of use" and continue by clicking on the install yellow button | * Tick "I accept the terms of use" and continue by clicking on the install yellow button | ||

* Wait until the install has been finished | * Wait until the install has been finished | ||

* Close and reopen the PBX manager again in order to refresh the list of the available colored AP plugin | * Close and reopen the '''Settings App''' (PBX manager) again in order to refresh the list of the available colored AP plugin | ||

* Click on the '''"AP assistant"''' and click on '''" + Add an App"''' and then on the '''"Assistant API"''' button. | * Click on the '''"AP assistant"''' and click on '''" + Add an App"''' and then on the '''"Assistant API"''' button. | ||

* Enter a '''"Name"''' that is used as display name ''(all character allowed)'' for it and the '''"SIP"''' name that is the administrative field ''(no space, no capital letters)''. ''e.g : Name: Assistant API, SIP: assistant-api'' | * Enter a '''"Name"''' that is used as display name ''(all character allowed)'' for it and the '''"SIP"''' name that is the administrative field ''(no space, no capital letters)''. ''e.g : Name: Assistant API, SIP: assistant-api'' | ||

| Line 29: | Line 30: | ||

* Tick the appropriate template to distribute the App (the app is needed at every user object from any user who wants to use the assistant API) | * Tick the appropriate template to distribute the App (the app is needed at every user object from any user who wants to use the assistant API) | ||

* Click OK to save the settings and a green check mark will be shown to inform you that the configuration is good | * Click OK to save the settings and a green check mark will be shown to inform you that the configuration is good | ||

If you have the license for the Assistant App you should repeat the above mentioned last five steps for that app ('''Assistant''') too. | |||

== Assistant App == | |||

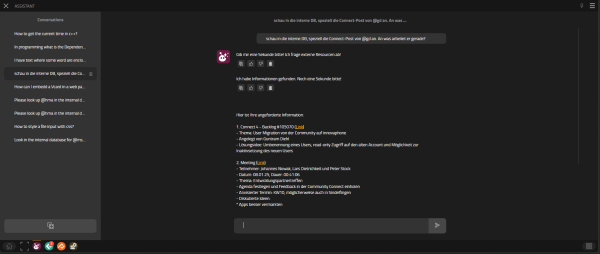

[[File:Reference15r1ConceptAppServiceMyAppsAssistant-AssistantUI-2.png|thumb|600x600px|/Reference15r1ConceptAppServiceMyAppsAssistant-AssistantUI-2.png|/Reference15r1ConceptAppServiceMyAppsAssistant-AssistantUI-2.png]] | |||

Since version 15r1, the application also offers a user interface, where conversations can be held with the connected large language model. These conversations are stored in a database managed by the service. | |||

There are a few parameters one can manage from within the hamburger menu. These are: | |||

* '''Send on Enter''' - You might choose that option if you want a message to be send to the LLM on pressing ENTER. Alternatively it would be CTRL+ENTER | |||

* '''Don't use an interface to other apps (no system prompt)''' - To allow the application access to myApps resources (such as the contacts and connect databases, call list, or the softphone app), a specially designed system prompt is sent to the model at the beginning of each conversation. If you do not require this feature and wish to reduce costs or processing time, you can disable the system prompt by checking the appropriate box. | |||

* '''Offline mode (Conversations are stored on your device)''' - Normally, all your conversations are stored in the central service's database, which enables you to retrieve them from any device you are logged into. However, if your internet connection is interrupted, you will not be able to access them until the connection is restored. To address this issue, you may choose to store your conversation data on your device, allowing you to access it even without an internet connection. However, you should not enable this feature when using a hardware device that is not your own. If you do, make sure to disable it afterwards to delete any locally stored data. Note that deleting locally stored conversations is not synchronized across all devices if they are all in Offline Mode! | |||

====== Currently implemented myApps features ====== | |||

The Assistant App integrates into the myApps platform and offers services from other apps via the [https://sdk.innovaphone.com/15r1/web1/com.innovaphone.assistant.plugin/com.innovaphone.assistant.plugin.htm local assistant.plugin JavaScript API]. This will be extended and improved in future releases. You may try the following prompts: | |||

* Ask for the number of a contact stored in the contacts database "Can you please find the number of Sarah Sample!" | |||

* Get information from Connect and Projects* "Please look up #AI in the '''internal''' '''database'''! Then tell me how we are planning to use it" | |||

* Get your call list "What are my recent calls?" | |||

* Calling someone "Can you please call Sarah Sample!" | |||

* Get the current time and date "What time and data is it right now?" | |||

* Start a new conversation "Please start a new conversation!" | |||

For the above mentioned prompts the following APIs should be available to the user: | |||

The | * com.innovaphone.assistant.plugin | ||

* com.innovaphone.search | |||

* com.innovaphone.calllist | |||

For now only keyword search is possible. In order to make the LLM understand that you want to search in Connect and Projects Databases you need to mention that you want to use the '''internal databases!''' Better use two sentences to make the LLM understand better. The search results are the same as those you would get from the '''myApps Search App'''. Only the first 40 matches of the keywords are considered and from these only the first 10k characters. | |||

<br>The prompts mentioned above may or may not work depending on the connected large language model. We cannot guarantee that the results will always be satisfactory, but as the application always sends the entire conversation to the model, it is recommended to start a new conversation if different topics are not directly related to each other. | |||

The prompts were successfully tested on the following models: | |||

* mixtral-q6-8192 | |||

* mixtral | |||

* deepseek-r1:14b | |||

* deepseek-r1:32b | |||

* deepseek-r1:70b | |||

* gpt-3.5-turbo | |||

* gpt-4o | |||

There are certainly many more. But currently only models with parameter sizes of more than 14 billion (14b) correctly understood the system prompt. Larger models perform better, but are slower and more expensive. | |||

[[File:Reference15r1 Concept App Service Assistant App-Config-2.png|thumb|/Reference15r1_Concept_App_Service_Assistant_App-Config-2.png|/Reference15r1_Concept_App_Service_Assistant_App-Config-2.png]] | |||

== myApps Assistant - App Service == | == myApps Assistant - App Service == | ||

| Line 43: | Line 84: | ||

* Translations using the LLM (not yet fully tested) | * Translations using the LLM (not yet fully tested) | ||

It can be configured in the PBX Manager App | It can be configured in the '''Settings App''' (PBX Manager App) [[Reference15r1:Apps/PbxManager/App_myApps_Assistant]] | ||

In order to make the app connect to your LLM model provider you need to configure a few fields. | In order to make the app connect to your LLM model provider you need to configure a few fields. | ||

* Remote Service URL - The URL where the remote service interacting with the LLM is hosted. If you are using a model hosted by openAI this would probably be: https://api.openai.com/v1/chat/completions | * '''Remote Service URL''' - The URL where the remote service interacting with the LLM is hosted. If you are using a model hosted by openAI this would probably be: https://api.openai.com/v1/chat/completions | ||

* LLM | * '''LLM (model)''' - The actual model you intend to use. There are a couple of open source models you could use (mixtral, deepseek-r1:14b...). If you intend to use openAI models this could be something like gpt-3.5-turbo or gpt-4o | ||

* API -Key - If you are not using a self-hosted model you might need an API-Key in order to make successful HTTP Requests to your provider. This key will be delivered by your LLM | * '''API -Key''' - If you are not using a self-hosted model you might need an API-Key in order to make successful HTTP Requests to your provider. This key will be delivered by your LLM provider (e.g. openAI https://platform.openai.com/signup ) | ||

Finding the right model name might sometimes be difficult. You could make an API call to your ai model service provider like that: | |||

curl -H "Authorization: Bearer YOUR-API-KEY" --get <nowiki>http://YOUR-MODEL-SERICE-PROVIDER-ADDRESS/v1/models</nowiki> | |||

The model service provider address could be the address of your ollama server, ''openai.inference.de-txl.ionos.com'' for IONOS or ''api.openai.com'' for openAI. (That API call will be implemented in version 16r1 making the configuration easier) | |||

=== Language recognition === | === Language recognition === | ||

An | An extra library is used to recognize the language used in a string. This recognition is also used for translations to avoid unnecessary translations (e.g. EN to EN translations are prevented). This payload will not be sent to any backend service, thus avoiding unnecessary costs.<br> | ||

A few features exist which should make sure, a recognized language is only considered, if there is enough confidence: | A few features exist which should make sure, a recognized language is only considered, if there is enough confidence: | ||

* Strings shorter than 6 words are skipped, since the chance for false positives are relatively high | * Strings shorter than 6 words are skipped, since the chance for false positives are relatively high | ||

* Strings in which are not enough word matches to be confident for any language will be skipped, also because of high chances for false positives | * Strings in which are not enough word matches to be confident for any language will be skipped, also because of high chances for false positives | ||

* Strings in which are more than one language with a nearly similar amount of word matches will be skipped | * Strings in which are more than one language with a nearly similar amount of word matches will be skipped | ||

=== Caching === | === Caching === | ||

| Line 68: | Line 109: | ||

=== Translations against the backend === | === Translations against the backend === | ||

Translation requests that cannot be handled by the local cache are forwarded to the configured translation backend. After successful translation, the translated version is kept in the cache for future requests. | Translation requests that cannot be handled by the local cache are forwarded to the configured translation backend, which is the LLM (there is only one) you configured in the '''Settings App''' (former PBX Manager Plugin). After successful translation, the translated version is kept in the cache for future requests. Currently no myApps applications are using the API [https://sdk.innovaphone.com/15r1/web1/com.innovaphone.assistant/com.innovaphone.assistant.htm local assistant JavaScript API] for translations. But developers may use it if a LLM is configured and no extra service provider should be used. | ||

== Troubleshooting == | == Troubleshooting == | ||

| Line 78: | Line 117: | ||

Related Articles

- SDK Documentation - Assistant API (https://sdk.innovaphone.com/15r1/web1/com.innovaphone.assistant/com.innovaphone.assistant.htm)

- Howto:Setup a LLM Server

Latest revision as of 10:27, 30 May 2025

Applies To

- innovaphone from version 15r1

Overview

The myApps Assistant App offers an interface for other apps to access a remote large language model (LLM). This might be a locally hosted open-source model such as mixtral or deepseek-r1, or a model hosted by a provider such as OpenAI (e.g. gpt-3.5-turbo, gpt-4o) or Mistral (https://mistral.ai/). Other apps can integrate the assistant service in the client via the local assistant JavaScript API (https://sdk.innovaphone.com/15r1/web1/com.innovaphone.assistant/com.innovaphone.assistant.htm) and thus present the response of the LLM.

The service acts as an intermediary and contains a corresponding backend. You therefore need either a self-hosted LLM service or an account with an external service provider. In the latter case, you can configure your API key for this app service in the Settings App (formerly the PBX Manager plugin), if needed.

Currently, only the OpenAI Chat Completions API v1 is implemented (which is also supported by several open-source projects, e.g. https://ollama.com/), but more API implementations are likely to follow.
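As a rough illustration of what a Chat Completions v1 exchange looks like on the wire, the following Python sketch builds such a request without sending it and reads the answer out of a response body. The function names are hypothetical and are not part of the Assistant service; only the JSON shape (model, messages, choices) follows the public Chat Completions format. How the service itself assembles its requests is not documented here.

```python
import json
import urllib.request


def build_chat_request(url, model, user_text, api_key=None, system_prompt=None):
    """Build (but do not send) a Chat Completions v1 request.

    Hypothetical helper for illustration only.
    """
    messages = []
    if system_prompt:
        # An optional system prompt always comes first in the message list.
        messages.append({"role": "system", "content": system_prompt})
    messages.append({"role": "user", "content": user_text})

    body = json.dumps({"model": model, "messages": messages}).encode("utf-8")
    headers = {"Content-Type": "application/json"}
    if api_key:
        # Self-hosted servers may not require a key at all.
        headers["Authorization"] = "Bearer " + api_key
    return urllib.request.Request(url, data=body, headers=headers, method="POST")


def extract_answer(response_json):
    """Return the assistant text from a parsed Chat Completions response."""
    return response_json["choices"][0]["message"]["content"]


if __name__ == "__main__":
    req = build_chat_request(
        "https://api.openai.com/v1/chat/completions",
        "gpt-4o",
        "What time is it?",
        api_key="YOUR-API-KEY",
    )
    print(req.get_method(), req.full_url)
    print(req.data.decode("utf-8"))
```

Sending the request would be a matter of passing it to urllib.request.urlopen and parsing the JSON reply before calling extract_answer.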

Licensing

An appropriate App(innovaphone-assistant) license must be installed on the PBX to enable the app for specific users.

The license can be assigned directly to a specific User Object or via a Config Template.

Installation

Go to the Settings App (PBX manager) and open the "AP app installer" plugin. The App Store is shown in the right panel. Hint: if you access it for the first time, you need to accept the "Terms of Use of the innovaphone App Store".

- In the search field in the top right corner of the store, search for "myApps Assistant" and click on it

- Select the proper firmware version, for example "Version 15r1", and click on install

- Tick "I accept the terms of use" and continue by clicking the yellow install button

- Wait until the installation has finished

- Close and reopen the Settings App (PBX manager) in order to refresh the list of available colored AP plugins

- Click on "AP assistant", then on "+ Add an App" and then on the "Assistant API" button

- Enter a "Name", which is used as the display name (all characters allowed), and the "SIP" name, which is the administrative field (no spaces, no capital letters), e.g. Name: Assistant API, SIP: assistant-api

- Fill in the fields for "Remote Service URL", "LLM Model" and if necessary "API Key" (for more information about these fields see below)

- Tick the appropriate template to distribute the app (the app is needed on the user object of every user who wants to use the Assistant API)

- Click OK to save the settings; a green check mark confirms that the configuration is valid

If you have a license for the Assistant App, repeat the last five steps above for that app (Assistant) as well.

Assistant App

Since version 15r1, the application also offers a user interface in which conversations can be held with the connected large language model. These conversations are stored in a database managed by the service.

A few parameters can be managed from the hamburger menu:

- Send on Enter - Choose this option if you want a message to be sent to the LLM when you press ENTER. Otherwise, messages are sent with CTRL+ENTER.

- Don't use an interface to other apps (no system prompt) - To allow the application access to myApps resources (such as the Contacts and Connect databases, the call list, or the softphone app), a specially designed system prompt is sent to the model at the beginning of each conversation. If you do not require this feature and wish to reduce costs or processing time, you can disable the system prompt by checking this box.

- Offline mode (Conversations are stored on your device) - Normally, all your conversations are stored in the central service's database, which enables you to retrieve them from any device you are logged in to. However, if your internet connection is interrupted, you cannot access them until the connection is restored. To address this, you may choose to store your conversation data on your device, so that you can access it even without an internet connection. Do not enable this feature on a hardware device that is not your own. If you do, make sure to disable it afterwards to delete any locally stored data. Note that deleting locally stored conversations is not synchronized across devices if they are all in Offline Mode!

Currently implemented myApps features

The Assistant App integrates into the myApps platform and offers services from other apps via the local assistant.plugin JavaScript API (https://sdk.innovaphone.com/15r1/web1/com.innovaphone.assistant.plugin/com.innovaphone.assistant.plugin.htm). This will be extended and improved in future releases. You may try the following prompts:

- Ask for the number of a contact stored in the contacts database: "Can you please find the number of Sarah Sample!"

- Get information from Connect and Projects: "Please look up #AI in the internal database! Then tell me how we are planning to use it"

- Get your call list: "What are my recent calls?"

- Call someone: "Can you please call Sarah Sample!"

- Get the current time and date: "What time and date is it right now?"

- Start a new conversation: "Please start a new conversation!"

For the prompts above, the following APIs should be available to the user:

- com.innovaphone.assistant.plugin

- com.innovaphone.search

- com.innovaphone.calllist

For now, only keyword search is possible. To make the LLM understand that you want to search the Connect and Projects databases, you need to mention that you want to use the internal databases. Splitting the request into two sentences helps the LLM understand it. The search results are the same as those you would get from the myApps Search App. Only the first 40 keyword matches are considered, and of these only the first 10k characters.

The prompts mentioned above may or may not work, depending on the connected large language model. We cannot guarantee that the results will always be satisfactory. Because the application always sends the entire conversation to the model, it is recommended to start a new conversation when topics are not directly related to each other.
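The effect of sending the entire conversation with every request can be sketched as follows. This is a minimal model under stated assumptions: the Conversation class and its methods are hypothetical, and the real application's data structures are not documented here. It only shows why the payload grows with every turn and why a fresh conversation is cheaper for an unrelated topic.

```python
class Conversation:
    """Hypothetical model of a chat history that is resent on every turn."""

    def __init__(self, system_prompt=None):
        self.messages = []
        if system_prompt:
            # The system prompt is sent once, at the start of the conversation.
            self.messages.append({"role": "system", "content": system_prompt})

    def next_request_messages(self, user_text):
        """Append the user message and return everything that would be sent."""
        self.messages.append({"role": "user", "content": user_text})
        return list(self.messages)

    def record_answer(self, answer):
        """Store the model's reply so it is part of the next request."""
        self.messages.append({"role": "assistant", "content": answer})


def payload_chars(messages):
    """Rough size measure: total characters of all message contents."""
    return sum(len(m["content"]) for m in messages)


if __name__ == "__main__":
    conv = Conversation(system_prompt="(system prompt placeholder)")
    first = conv.next_request_messages("What are my recent calls?")
    conv.record_answer("(model answer placeholder)")
    second = conv.next_request_messages("Can you please call Sarah Sample!")
    print(len(first), payload_chars(first))
    print(len(second), payload_chars(second))
```

Each later request carries all earlier questions and answers, so unrelated history costs tokens and processing time and can also distract the model.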

The prompts were successfully tested on the following models:

- mixtral-q6-8192

- mixtral

- deepseek-r1:14b

- deepseek-r1:32b

- deepseek-r1:70b

- gpt-3.5-turbo

- gpt-4o

There are certainly many more. Currently, however, only models with 14 billion (14b) or more parameters understood the system prompt correctly. Larger models perform better, but are slower and more expensive.

myApps Assistant - App Service

The App Service performs tasks in the following areas:

- Question relay to an LLM

- Language recognition

- Caching

- Translations using the LLM (not yet fully tested)

It can be configured in the Settings App (PBX Manager App); see Reference15r1:Apps/PbxManager/App_myApps_Assistant.

To make the app connect to your LLM provider, you need to configure a few fields.

- Remote Service URL - The URL where the remote service interacting with the LLM is hosted. If you are using a model hosted by OpenAI, this would probably be: https://api.openai.com/v1/chat/completions

- LLM (model) - The actual model you intend to use. There are a couple of open-source models you could use (mixtral, deepseek-r1:14b...). If you intend to use OpenAI models, this could be something like gpt-3.5-turbo or gpt-4o

- API Key - If you are not using a self-hosted model, you might need an API key in order to make successful HTTP requests to your provider. This key is issued by your LLM provider (e.g. OpenAI, https://platform.openai.com/signup)

Finding the right model name can sometimes be difficult. You can ask your AI model service provider for the available models with an API call like this:

curl -H "Authorization: Bearer YOUR-API-KEY" --get http://YOUR-MODEL-SERVICE-PROVIDER-ADDRESS/v1/models

The model service provider address could be the address of your ollama server, openai.inference.de-txl.ionos.com for IONOS or api.openai.com for OpenAI. (This API call will be built into version 16r1, making the configuration easier.)
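The same model lookup can be sketched in Python. The helper names below are hypothetical, and the sketch assumes that the provider answers in the OpenAI list format, that is, a JSON object with a "data" array whose entries carry an "id". Providers that deviate from that format would need different parsing.

```python
import json
import urllib.request


def build_models_request(address, api_key=None, scheme="https"):
    """Build a GET request for the /v1/models endpoint (hypothetical helper)."""
    headers = {}
    if api_key:
        headers["Authorization"] = "Bearer " + api_key
    url = f"{scheme}://{address}/v1/models"
    return urllib.request.Request(url, headers=headers, method="GET")


def model_ids(response_text):
    """Extract the sorted model names from an OpenAI-style model list."""
    data = json.loads(response_text)
    return sorted(entry["id"] for entry in data.get("data", []))


def fetch_model_ids(address, api_key=None, scheme="https"):
    """Send the request and return the model names (requires network access)."""
    request = build_models_request(address, api_key, scheme)
    with urllib.request.urlopen(request, timeout=10) as response:
        return model_ids(response.read().decode("utf-8"))


if __name__ == "__main__":
    # Offline demonstration with a hand-written sample response.
    sample = '{"object": "list", "data": [{"id": "mixtral"}, {"id": "deepseek-r1:14b"}]}'
    print(model_ids(sample))
```

One of the returned names is what goes into the "LLM (model)" field.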

Language recognition

An extra library is used to recognize the language of a string. This recognition is also used to avoid unnecessary translations (e.g. EN to EN translations are prevented). Recognition runs locally: the string is not sent to any backend service, which avoids unnecessary costs.

A few safeguards ensure that a recognized language is only considered if there is enough confidence:

- Strings shorter than 6 words are skipped, since the chance of false positives is relatively high

- Strings without enough word matches to be confident about any language are skipped, also because of the high chance of false positives

- Strings in which more than one language has a nearly similar number of word matches are skipped
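The three safeguards above can be sketched as a small word-match detector. This is an illustrative sketch, not the library used by the service: the word lists are tiny hypothetical samples, and apart from the 6-word minimum, the thresholds MIN_MATCHES and MIN_LEAD are assumptions chosen for the example.

```python
import re

MIN_WORDS = 6    # from the text: shorter strings are skipped
MIN_MATCHES = 3  # assumption: minimum word matches for any confidence
MIN_LEAD = 2     # assumption: required lead over the runner-up language

# Hypothetical, deliberately tiny word lists for illustration only.
COMMON_WORDS = {
    "en": {"the", "and", "is", "are", "you", "to", "of", "in", "it", "that"},
    "de": {"der", "die", "das", "und", "ist", "nicht", "ich", "zu", "ein", "wir"},
}


def detect_language(text):
    """Return a language code, or None if confidence is too low."""
    words = re.findall(r"[^\W\d_]+", text.lower())
    if len(words) < MIN_WORDS:
        return None  # too short, high risk of false positives

    counts = {
        lang: sum(1 for word in words if word in vocabulary)
        for lang, vocabulary in COMMON_WORDS.items()
    }
    ranked = sorted(counts.items(), key=lambda item: item[1], reverse=True)
    (best_lang, best), (_, second) = ranked[0], ranked[1]

    if best < MIN_MATCHES:
        return None  # not enough matches for any language
    if best - second < MIN_LEAD:
        return None  # two languages are too close to call
    return best_lang


def needs_translation(text, target_lang):
    """Skip translation only when the text is confidently in the target language."""
    detected = detect_language(text)
    return detected is None or detected != target_lang


if __name__ == "__main__":
    print(detect_language("The weather is nice and you are welcome to join"))
    print(needs_translation("The weather is nice and you are welcome to join", "en"))
```

In this sketch an unrecognized string is still translated. Whether the service treats that case the same way is not stated in this article.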

Caching

To save costs, all translations are cached in the App Service's database. If a string is translated multiple times, only the first translation is carried out by the backend and the translated version is saved in the cache. Subsequent translations of the same string are therefore free of charge and faster.
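The caching behaviour can be illustrated with a minimal sketch. The class, the table layout and the fake backend are assumptions made for this example. An in-memory SQLite database stands in for the App Service's database, whose actual schema is not described here.

```python
import sqlite3


class TranslationCache:
    """Hypothetical cache: ask the backend only for unseen (text, language) pairs."""

    def __init__(self, backend, db_path=":memory:"):
        self._backend = backend  # callable: (text, target_lang) -> translated text
        self.backend_calls = 0   # counts how often the backend was really used
        self._db = sqlite3.connect(db_path)
        self._db.execute(
            "CREATE TABLE IF NOT EXISTS translations ("
            "source TEXT, target_lang TEXT, translated TEXT, "
            "PRIMARY KEY (source, target_lang))"
        )

    def translate(self, text, target_lang):
        row = self._db.execute(
            "SELECT translated FROM translations WHERE source = ? AND target_lang = ?",
            (text, target_lang),
        ).fetchone()
        if row is not None:
            return row[0]  # cache hit: no backend cost

        translated = self._backend(text, target_lang)
        self.backend_calls += 1
        self._db.execute(
            "INSERT INTO translations VALUES (?, ?, ?)",
            (text, target_lang, translated),
        )
        self._db.commit()
        return translated


if __name__ == "__main__":
    # Stand-in for the LLM backend; it only tags the text with the language.
    def fake_backend(text, lang):
        return f"[{lang}] {text}"

    cache = TranslationCache(fake_backend)
    cache.translate("Good morning", "de")
    cache.translate("Good morning", "de")
    print(cache.backend_calls)
```

Keying the cache on both the source string and the target language means the same text translated into a second language still triggers one backend call.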

Translations against the backend

Translation requests that cannot be handled by the local cache are forwarded to the configured translation backend, which is the LLM (there is only one) you configured in the Settings App (formerly the PBX Manager plugin). After a successful translation, the translated version is kept in the cache for future requests. Currently, no myApps applications use the local assistant JavaScript API for translations, but developers may use it if an LLM is configured and no additional service provider is to be used.

Troubleshooting

To troubleshoot this App Service, enable the trace flags App, Database and HTTP-Client in your App instance.